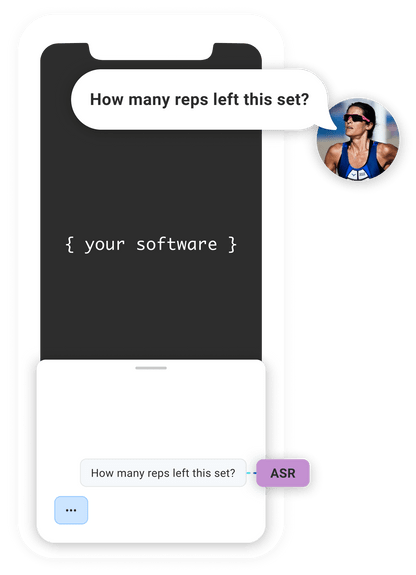

Automatic Speech Recognition

Analyze and transcribe your software’s audio to perform a function or simply record.


What is Automatic Speech Recognition?

Automatic speech recognition, or ASR, is the process of analyzing and transcribing a chunk of audio without human intervention, producing text that software can process further, either to perform a function or simply to keep as a record. The technology is ubiquitous: it has a place in the stack of every major voice assistant on the market.

How Does ASR Work?

Many different techniques have been used to accomplish this throughout ASR's long history, but modern models use (what else?) neural networks. The size and performance characteristics of these models vary widely, depending on where they're designed to be deployed and their intended use cases.

Technology has advanced to the point where models small enough to fit on a mobile device and run in almost real time are accurate enough to use for many tasks, but models that run in the cloud are still widely used for their speed and relatively higher accuracy.

ASR models are trained on large, diverse collections of speech and corresponding transcripts. From this collection, they learn to recognize either individual phonemes, the smallest meaningful units of sound in a language, or characters of text. The component that learns this mapping from audio to basic units is called an acoustic model.

To process user speech, acoustic models are often combined with language models, which are independently trained on large collections of text to learn the most common groupings of sounds and letters into words. When ASR is active, the acoustic model receives raw audio, translating it into a stream of whatever basic units (phonemes or characters) it was trained to produce. The language model receives this output and arranges it into the most likely stream of words in the target language.
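The hand-off from acoustic model to language model can be illustrated with a toy sketch. It assumes the acoustic model has already emitted a stream of characters with no word boundaries, and it stands in for the language model with a tiny table of word probabilities. The table `WORD_PROBS`, its values, and the function `decode_words` are made up for illustration; production systems use far larger neural or n-gram models and typically search over many acoustic hypotheses at once.

```python
import math

# Toy unigram "language model": word -> probability (made-up values).
WORD_PROBS = {
    "turn": 0.2,
    "on": 0.2,
    "the": 0.3,
    "lights": 0.1,
    "light": 0.1,
    "s": 0.001,
    "to": 0.05,
    "urn": 0.001,
}


def decode_words(chars: str, word_probs: dict[str, float], max_len: int = 10) -> list[str]:
    """Split a space-free character stream into its most probable word sequence.

    Returns an empty list if the stream cannot be covered by known words.
    """
    n = len(chars)
    # best[i] holds (log-probability, words) for the best split of chars[:i].
    best: list[tuple[float, list[str]]] = [(-math.inf, [])] * (n + 1)
    best[0] = (0.0, [])
    for end in range(1, n + 1):
        for start in range(max(0, end - max_len), end):
            word = chars[start:end]
            if word not in word_probs or best[start][0] == -math.inf:
                continue
            score = best[start][0] + math.log(word_probs[word])
            if score > best[end][0]:
                best[end] = (score, best[start][1] + [word])
    return best[n][1]


# Pretend the acoustic model produced this character stream from raw audio.
acoustic_output = "turnonthelights"
print(decode_words(acoustic_output, WORD_PROBS))
# ['turn', 'on', 'the', 'lights']
```

The dynamic program prefers "lights" over "light" followed by "s" because the single word is far more probable under the toy table, which is the same kind of judgment a real language model makes at much larger scale.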

Benefits of ASR

Some form of ASR is a must for any application that wants to process user speech; without it, there's no way to tell what the user said. Not all speech recognizers are created equal, though, and there are many factors to consider when choosing an ASR for your app.

ASR accuracy is often measured in Word Error Rate (WER): the percentage of words that differ between the ASR system's transcript and a gold-standard reference transcript. Getting an accurate WER measurement means juggling many different variables (think accent, background noise, whether we're talking about a single speaker's speech vs. a multi-party conversation, etc.), and the resulting numbers are easy to spin. There are, however, various academic comparisons of major vendors. For perspective, human WER hovers somewhere between 4% and 11%, depending on variables like those mentioned above, usually falling on the lower end of that range.
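WER is conventionally computed as the word-level edit distance between the two transcripts (substitutions plus deletions plus insertions) divided by the number of words in the reference. The sketch below is one minimal way to do that; the function name `word_error_rate` and the lowercase-and-split normalization are assumptions made for this example, and real evaluations usually normalize punctuation and numbers more carefully.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (substitutions + deletions + insertions) / reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words seen so far
    # (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("turn on the kitchen lights", "turn on the chicken light"))
# 0.4
```

Two of the five reference words were substituted, giving a WER of 40%. Because insertions count as errors, WER can exceed 100% when the hypothesis is much longer than the reference.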

Tips for Choosing the Right Speech Recognizer

Be wary of anything advertised as performing better than humans; it was likely measured under conditions very favorable to the system.

Look for a vendor that has support for the languages your users speak. Truly multilingual ASR isn't widely available, but cloud ASR vendors often allow you to send a language code along with your audio to receive the type of results your app expects.
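As a rough illustration of sending a language code with your audio, the sketch below bundles the two into a single request object and rejects unsupported languages before anything leaves the app. Everything here is hypothetical: `TranscriptionRequest`, `build_request`, the field names, and the `SUPPORTED_LANGUAGES` set do not correspond to any particular vendor's API, so check your provider's documentation for the real parameter names and language list.

```python
from dataclasses import dataclass

# Hypothetical list of language codes a vendor might accept.
SUPPORTED_LANGUAGES = {"en-US", "en-GB", "es-ES", "fr-FR"}


@dataclass(frozen=True)
class TranscriptionRequest:
    """Hypothetical request payload: audio plus the language it is spoken in."""
    audio: bytes
    language_code: str
    sample_rate_hz: int = 16000


def build_request(audio: bytes, language_code: str,
                  sample_rate_hz: int = 16000) -> TranscriptionRequest:
    """Validate the language code locally, then package the request."""
    if language_code not in SUPPORTED_LANGUAGES:
        raise ValueError(f"unsupported language code: {language_code!r}")
    return TranscriptionRequest(audio, language_code, sample_rate_hz)


request = build_request(b"\x00\x01\x02\x03", "es-ES")
print(request.language_code)
# es-ES
```

Validating up front gives the app a chance to fall back to a default language or prompt the user, rather than discovering the mismatch from a failed or nonsensical transcript.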

Some ASR providers will also allow you to customize the language model by providing a list of vocabulary specific to your app. This can be helpful if you're expecting to process a lot of technical jargon, or if you want to improve support for a relatively small collection of well-known commands or phrases used to communicate with your app. For very constrained vocabularies, you might also benefit from using a keyword model as your ASR system.
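One way to picture vocabulary customization is as a score boost for transcripts that contain your app's terms. The sketch below assumes the recognizer returns several candidate transcripts with scores (higher is better) and picks the best one after boosting. The function `rescore_with_vocabulary`, the scores, and the `boost` value are illustrative assumptions; providers that support custom vocabularies apply their own biasing inside the recognizer.

```python
def rescore_with_vocabulary(hypotheses: list[tuple[str, float]],
                            vocabulary: list[str],
                            boost: float = 1.0) -> str:
    """Return the transcript with the best score after boosting vocabulary hits.

    hypotheses: (transcript, score) pairs, where a higher score is better.
    vocabulary: app-specific single-word terms to favor.
    """
    vocab = {term.lower() for term in vocabulary}

    def boosted(item: tuple[str, float]) -> float:
        text, score = item
        hits = sum(1 for word in text.lower().split() if word in vocab)
        return score + boost * hits

    return max(hypotheses, key=boosted)[0]


# Made-up candidates: the generic model slightly prefers the wrong one.
candidates = [
    ("deploy the cube control pod", -4.0),
    ("deploy the kubectl pod", -4.5),
]
print(rescore_with_vocabulary(candidates, vocabulary=["kubectl"]))
# deploy the kubectl pod
```

With an empty vocabulary the same call returns the first candidate, which shows how a short list of jargon can tip the balance toward the transcript your app actually expects.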